How to Build a Fault-Tolerant Microservice Using Spring

Make Your Microservices More Resilient with Resilience4J and Spring

Have users complained that pages frequently take a long time to load, only to show an error after the long wait? After investigating, you found the root cause: a downstream system was running a slow SQL query that took a long time to finish. You might have concluded there was not much you could do, since that system was a necessary dependency and you didn't own it.

Today's discussion offers a good solution for a situation like this. We will discuss how to make your microservice more resilient and give end users a better experience when a downstream service is not responsive.

Design Challenge

How can we design a highly fault-tolerant microservice system? When you have a chain of dependencies between microservices and one service in a synchronous call chain stops responding, every upstream service suffers and waits until the unresponsive service returns to normal. The problem spreads through the system, because one unresponsive service freezes every service in the dependency chain. This cascading failure is often the result of a naive distributed system design or an overly optimistic system architect.

There are different ways to handle a situation like this, such as setting network timeouts and limiting the number of outstanding client requests. Today, however, we will focus on what to do on the client side to make your microservice application more fault-tolerant. In other words, when your microservice is the victim of a poorly written SQL query in a remote application, how can you protect your service, keep it responsive, and isolate the unresponsive service so that the entire system does not go down?

Solution

You can apply the Client-Side Resiliency pattern, which includes Circuit Breaker, Fallback, Bulkhead, and Client-Side Load Balancing. The main goal of this design is to protect a client service, and the system as a whole, from unavailable or degraded resources.

We will cover client-side load balancing in a future post and focus on Circuit Breaker, Fallback, and Bulkhead today.

- Circuit Breaker: Decides whether calls to a remote service are allowed or rejected, based on thresholds configured for each service. The thresholds cover two things: when to trip (open) the circuit after enough calls fail, and when to start letting calls through again after enough trial calls succeed.

- Fallback: Provides an alternative response when a call fails, such as returning a default message or redirecting to an error page.

- Bulkhead: Assigns a separate thread pool in the client service to each remote resource, so that one slow resource cannot exhaust the client's threads.
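A minimal plain-JDK sketch can make the bulkhead idea concrete. It is not Resilience4J code: the class ResourceBulkheads, the resource names, and the pool sizes are hypothetical. Each remote resource gets its own bounded ThreadPoolExecutor, so saturating one pool leaves the others untouched.

```java
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch (plain JDK, not Resilience4J): one small, bounded thread pool per remote resource.
class ResourceBulkheads {
    private final Map<String, ThreadPoolExecutor> pools = new ConcurrentHashMap<>();
    private final int maxThreads;
    private final int queueCapacity; // must be >= 1 for ArrayBlockingQueue

    ResourceBulkheads(int maxThreads, int queueCapacity) {
        this.maxThreads = maxThreads;
        this.queueCapacity = queueCapacity;
    }

    // Lazily creates the pool dedicated to one resource.
    private ThreadPoolExecutor poolFor(String resource) {
        return pools.computeIfAbsent(resource, name -> new ThreadPoolExecutor(
                maxThreads, maxThreads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity),
                runnable -> {
                    Thread t = new Thread(runnable, "bulkhead-" + name);
                    t.setDaemon(true); // don't keep the JVM alive in this demo
                    return t;
                },
                new ThreadPoolExecutor.AbortPolicy())); // busy threads + full queue => RejectedExecutionException
    }

    // Runs the call on the resource's own pool. A saturated pool rejects new work
    // for that resource only; other resources keep their own threads.
    <T> Future<T> submit(String resource, Callable<T> call) {
        return poolFor(resource).submit(call);
    }

    void shutdown() {
        pools.values().forEach(ThreadPoolExecutor::shutdownNow);
    }
}
```

With one thread and a one-slot queue per resource, a third concurrent call to a stuck slowService is rejected immediately, while a call to fastService still runs on that resource's own thread.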

We should be able to observe three effects.

- Fail fast: A client service should stop waiting for the response from a poorly performing resource as soon as the wait time exceeds the threshold.

- Isolation of degradation: A client service should keep sending requests normally to every service in the system except the unavailable one.

- Recover seamlessly: A client service should wait until the remote resource has recovered and resume sending requests once it is fully ready again.
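The fail-fast effect can be sketched with plain JDK APIs, assuming Java 9 or later for CompletableFuture.orTimeout. FailFastClient and callWithTimeout are hypothetical names, and the fixed fallback string stands in for a real fallback.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical sketch of "fail fast" with plain JDK APIs (Java 9+ for orTimeout).
class FailFastClient {
    // Daemon threads so an abandoned slow call doesn't keep the JVM alive.
    private final ExecutorService executor = Executors.newCachedThreadPool(runnable -> {
        Thread t = new Thread(runnable, "remote-call");
        t.setDaemon(true);
        return t;
    });

    // Waits at most timeoutMillis for the remote call, then returns the fallback instead.
    // Note: orTimeout only stops the caller from waiting; the slow task itself keeps running.
    String callWithTimeout(Supplier<String> remoteCall, long timeoutMillis, String fallback) {
        return CompletableFuture.supplyAsync(remoteCall, executor)
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS)
                .exceptionally(error -> fallback) // TimeoutException or any failure of the call
                .join();
    }
}
```

Because orTimeout only completes the future exceptionally, the slow task keeps running in the background; limiting how many such abandoned calls can pile up is the bulkhead's job.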

Implementation

Today, I will use Resilience4J to show how to implement Circuit Breaker, Fallback, and Bulkhead. Netflix Hystrix is another option worth exploring, since it inspired Resilience4J.

The high-level steps of the implementation are:

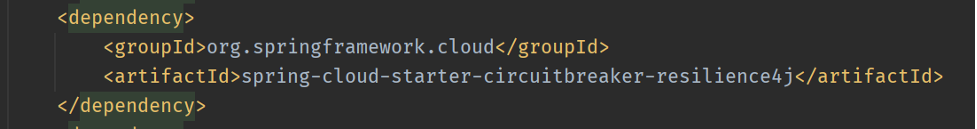

- Update pom.xml to add the Resilience4J dependency, as shown in Figure 1

- Add annotations to mark a method for the circuit breaker, fallback, and bulkhead

- Configure the circuit breaker and bulkhead in a property file

Figure 1: Resilience4J in pom.xml

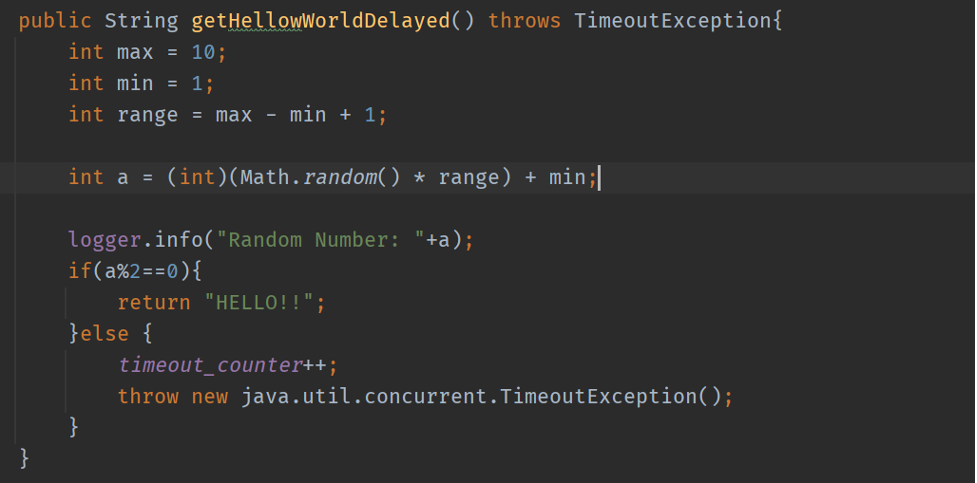

As shown in Figure 2, I created the getHelloWorldDelayed method to mimic a remote resource that performs poorly. The method throws a TimeoutException when the random number generated with Math.random() is odd, and returns a text message when it is even.

Figure 2: A method that intentionally throws a TimeoutException for demonstration purposes
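For readers who cannot see the figure, here is a rough plain-Java stand-in for the getHelloWorldDelayed method. It is a sketch, not the author's code: it assumes an injected java.util.Random instead of calling Math.random() directly so the behavior can be tested, and the class name FakeRemoteResource and the returned text are hypothetical.

```java
import java.util.Random;
import java.util.concurrent.TimeoutException;

// Hypothetical stand-in for the fake remote resource described above.
class FakeRemoteResource {
    private final Random random;

    // Injecting Random (instead of Math.random()) lets tests pick odd or even numbers.
    FakeRemoteResource(Random random) {
        this.random = random;
    }

    String getHelloWorldDelayed() throws TimeoutException {
        int number = random.nextInt(100); // a random integer in [0, 100)
        System.out.println("random number: " + number);
        if (number % 2 != 0) {
            // Odd number: pretend the remote resource was too slow to answer.
            throw new TimeoutException("Simulated slow remote resource (" + number + ")");
        }
        // Even number: pretend the remote resource answered normally.
        return "Hello World (" + number + ")";
    }
}
```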

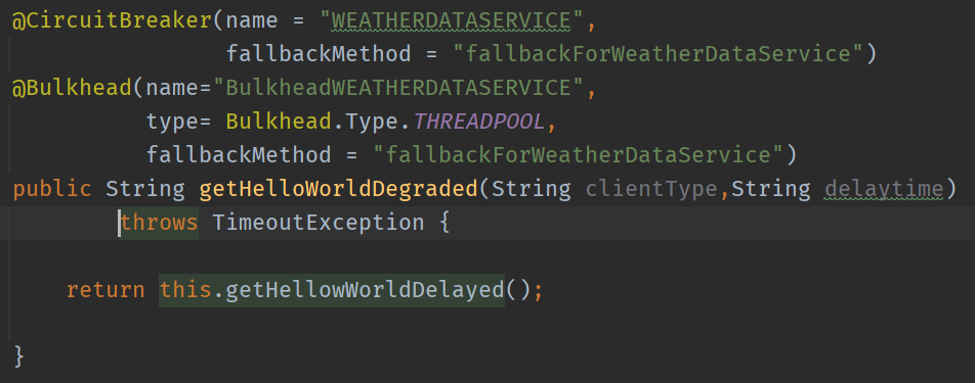

Figures 3 and 4 show the getHelloWorldDegraded method, which I annotated with @CircuitBreaker and @Bulkhead. It calls the getHelloWorldDelayed method, which pretends to invoke a remote resource. The name in @CircuitBreaker must match the instance name configured in application.yml. I also provided a fallback method that handles any failure.

The @Bulkhead annotation in Figure 4 is configured with the Thread Pool type and uses the same fallback method as the circuit breaker.

Figure 3: The getHelloWorldDegraded method annotated with CircuitBreaker and Bulkhead

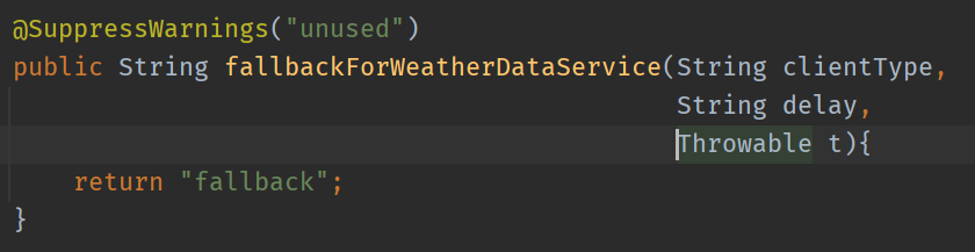

Figure 4: Fallback method for the circuit breaker configured for the getHelloWorldDegraded method.
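The fallback wiring can be pictured with a plain-Java sketch. Resilience4J expects the method named in fallbackMethod to live in the same class and to have the same parameters as the protected method plus one exception parameter; the sketch below imitates that shape by hand. HelloWorldService, its constructor, and the fallback text are hypothetical, and no Resilience4J code is involved.

```java
import java.util.concurrent.Callable;

// Hypothetical plain-Java imitation of what the annotations' fallbackMethod does.
class HelloWorldService {
    private final Callable<String> remoteCall; // e.g. a call to the fake remote resource

    HelloWorldService(Callable<String> remoteCall) {
        this.remoteCall = remoteCall;
    }

    // Protected method: try the remote call, hand any failure to the fallback.
    String getHelloWorldDegraded() {
        try {
            return remoteCall.call();
        } catch (Exception e) {
            return fallback(e);
        }
    }

    // Same parameters as getHelloWorldDegraded (none) plus the exception, as in Resilience4J's convention.
    String fallback(Throwable t) {
        return "Fallback: service is busy, please try again later (" + t.getClass().getSimpleName() + ")";
    }
}
```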

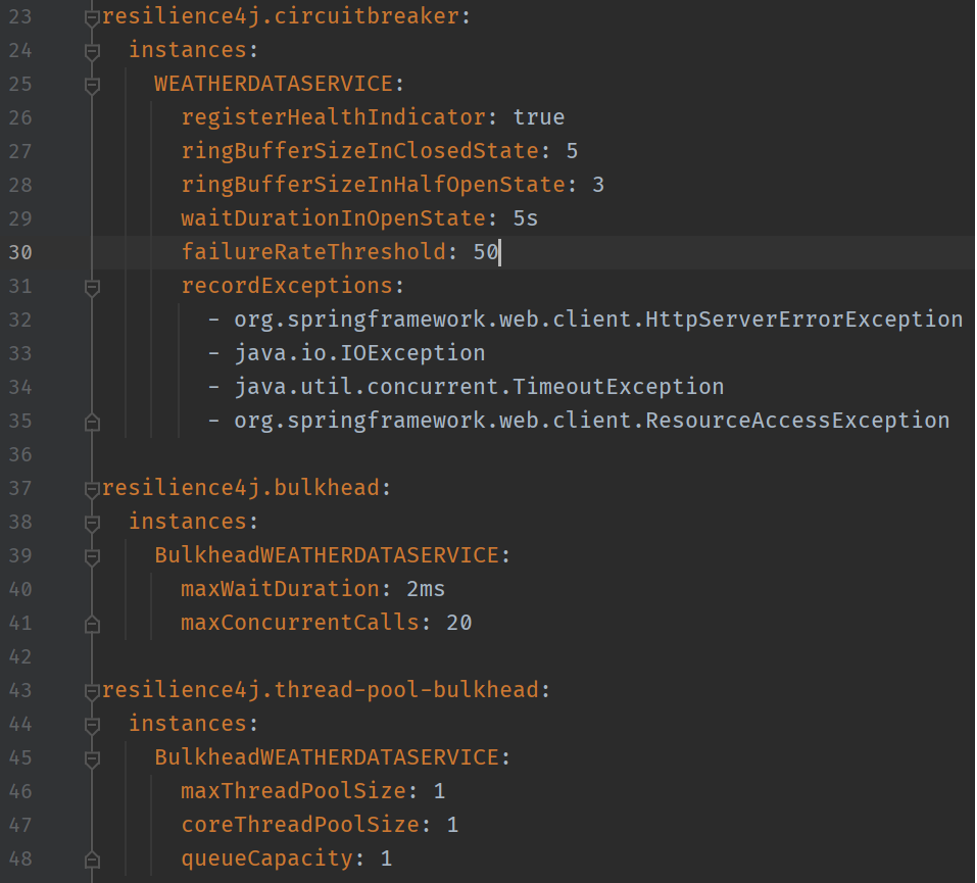

As shown in Figure 5, I configured the circuit breaker to react to TimeoutException by adding it to the recordExceptions list in my property file. The failureRateThreshold property sets the failure rate, as a percentage, at which the circuit trips: when the measured failure rate reaches or exceeds this threshold, the circuit breaker moves to the OPEN state. The waitDurationInOpenState property sets how long the circuit breaker stays OPEN before it moves to the HALF-OPEN state. In the HALF-OPEN state, a limited number of trial calls go through; if their failure rate is below failureRateThreshold, the circuit returns to the CLOSED state, otherwise it goes back to OPEN.
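The state transitions described above can be sketched as a small count-based state machine. This is a simplified, hypothetical SimpleCircuitBreaker written for illustration, not Resilience4J's implementation; its fields borrow the names of the properties discussed (failureRateThreshold, waitDurationInOpenState, and the number of trial calls permitted in HALF-OPEN), and the injected clock exists only to make it testable.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.Callable;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Hypothetical, simplified count-based circuit breaker (not Resilience4J's implementation).
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int slidingWindowSize;              // calls evaluated while CLOSED
    private final double failureRateThreshold;        // percentage, e.g. 50.0
    private final long waitDurationInOpenStateMillis; // how long to stay OPEN
    private final int permittedCallsInHalfOpenState;  // trial calls allowed while HALF_OPEN
    private final LongSupplier clockMillis;           // injected so tests can control time

    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAtMillis;
    private int halfOpenPermits;

    SimpleCircuitBreaker(int slidingWindowSize, double failureRateThreshold,
                         long waitDurationInOpenStateMillis, int permittedCallsInHalfOpenState,
                         LongSupplier clockMillis) {
        this.slidingWindowSize = slidingWindowSize;
        this.failureRateThreshold = failureRateThreshold;
        this.waitDurationInOpenStateMillis = waitDurationInOpenStateMillis;
        this.permittedCallsInHalfOpenState = permittedCallsInHalfOpenState;
        this.clockMillis = clockMillis;
    }

    // Runs the call if the circuit allows it; otherwise (or on failure) returns the fallback.
    // Every exception counts as a failure here; Resilience4J narrows this with recordExceptions.
    <T> T call(Callable<T> remoteCall, Function<Throwable, T> fallback) {
        if (!tryAcquirePermission()) {
            // OPEN: short-circuit without touching the remote resource at all.
            return fallback.apply(new IllegalStateException("Circuit is OPEN"));
        }
        T result;
        try {
            result = remoteCall.call();
        } catch (Exception e) {
            onResult(true);
            return fallback.apply(e);
        }
        onResult(false);
        return result;
    }

    synchronized State state() { return state; }

    private synchronized boolean tryAcquirePermission() {
        if (state == State.OPEN && clockMillis.getAsLong() - openedAtMillis >= waitDurationInOpenStateMillis) {
            transitionTo(State.HALF_OPEN); // wait is over: allow a few trial calls
        }
        if (state == State.CLOSED) return true;
        if (state == State.HALF_OPEN && halfOpenPermits > 0) {
            halfOpenPermits--;
            return true;
        }
        return false;
    }

    private synchronized void onResult(boolean failed) {
        if (state == State.OPEN) return; // ignore results that arrive after the circuit opened
        int required = (state == State.HALF_OPEN) ? permittedCallsInHalfOpenState : slidingWindowSize;
        outcomes.addLast(failed);
        if (outcomes.size() > required) outcomes.removeFirst(); // keep only the most recent calls
        if (outcomes.size() < required) return;                  // not enough calls to judge yet
        long failures = outcomes.stream().filter(f -> f).count();
        double failureRate = 100.0 * failures / outcomes.size();
        if (failureRate >= failureRateThreshold) {
            transitionTo(State.OPEN);   // trip, or re-trip from HALF_OPEN
        } else if (state == State.HALF_OPEN) {
            transitionTo(State.CLOSED); // trial calls were healthy enough
        }
    }

    private void transitionTo(State next) {
        state = next;
        outcomes.clear();
        if (next == State.OPEN) openedAtMillis = clockMillis.getAsLong();
        if (next == State.HALF_OPEN) halfOpenPermits = permittedCallsInHalfOpenState;
    }
}
```

With a window of 4 calls and a 50% threshold, two failures out of four trip the circuit; while it is OPEN the remote call is skipped entirely and only the fallback answers, until the wait duration has passed and the trial calls decide between CLOSED and OPEN.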

The other configuration to pay attention to is the bulkhead. Resilience4J provides two bulkhead implementations: Semaphore and Thread Pool. A semaphore bulkhead limits the number of concurrent calls to a service, while a thread pool bulkhead gives each resource its own thread pool with a maximum number of threads. This way, when a bad resource has used up every thread in its pool, the pools serving other resources are unaffected.

Figure 5: Configuration for Circuit Breaker in application.yml
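The Semaphore option can be sketched with java.util.concurrent.Semaphore. This hypothetical SemaphoreBulkhead rejects a call immediately when all permits are taken; Resilience4J's semaphore bulkhead is configured through properties such as maxConcurrentCalls and maxWaitDuration, so the code below only illustrates the idea.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.Semaphore;
import java.util.function.Function;

// Hypothetical sketch of a semaphore bulkhead: cap concurrent calls to one resource.
class SemaphoreBulkhead {
    private final Semaphore permits;

    SemaphoreBulkhead(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    <T> T call(Callable<T> remoteCall, Function<Throwable, T> fallback) {
        // Zero wait time: if every permit is in use, reject right away.
        if (!permits.tryAcquire()) {
            return fallback.apply(new IllegalStateException("Bulkhead is full"));
        }
        try {
            return remoteCall.call(); // runs on the caller's own thread
        } catch (Exception e) {
            return fallback.apply(e);
        } finally {
            permits.release(); // always give the permit back
        }
    }
}
```

Unlike the thread pool variant, the call runs on the caller's own thread; the semaphore only caps how many callers can be inside the remote call at the same time.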

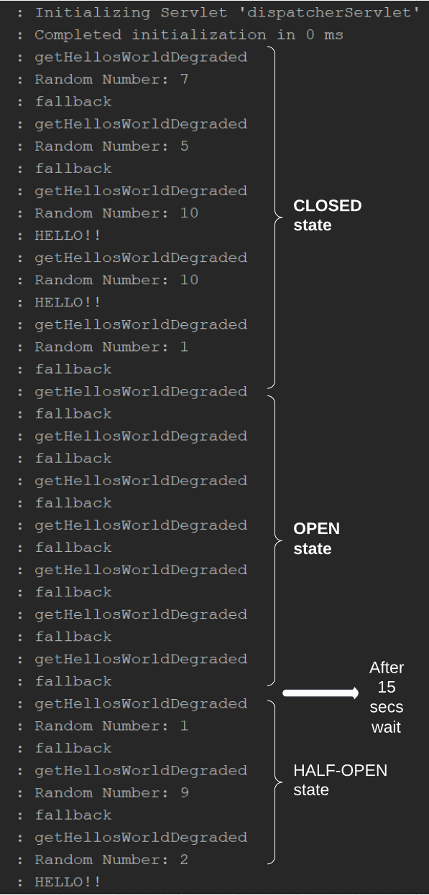

Figure 6 shows how the circuit breaker reacts to the TimeoutException according to the configuration. When I start sending requests to the example application while the circuit is CLOSED, the random numbers are printed, along with the fallback message for odd numbers. Once the circuit breaker detects that the failure rate has reached the threshold, it switches to the OPEN state and no random numbers are printed, because it stops calling the fake remote resource. After 15 seconds, it moves to the HALF-OPEN state and calls the fake resource again as I send requests, so the random numbers reappear. As described earlier, if the failure rate is below the threshold, the circuit returns to the CLOSED state.

Figure 6: Log of the example application

Conclusion

Today, we discussed what Resilience4J offers for implementing the Client-Side Resiliency pattern and how it can help make your services more fault-tolerant and resilient.