Load Multiple json Files into a Database Table Using KNIME

I was recently tasked with loading a lot of historical json files into a database. I wanted to use KNIME to create a repeatable workflow to address this issue since this would be need to be an ongoing process after the initial loading of data was complete.

Here is the workflow I created (Figure 1).

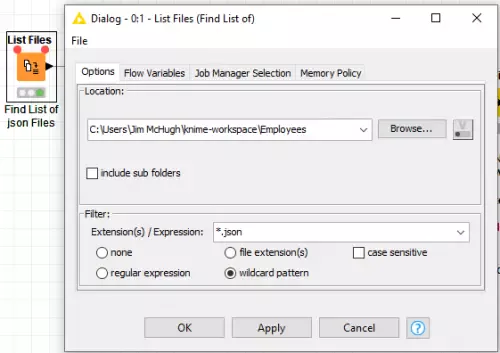

Let’s take a look at the component steps for a better understanding of each node and why they are used. I started by reading a list of json files contained in a specified directory (Figure 2.). It is obvious that this is needed for the historical load but daily/weekly/monthly/quarterly/yearly loads can fail and you always want to ensure that all files are captured and processed.

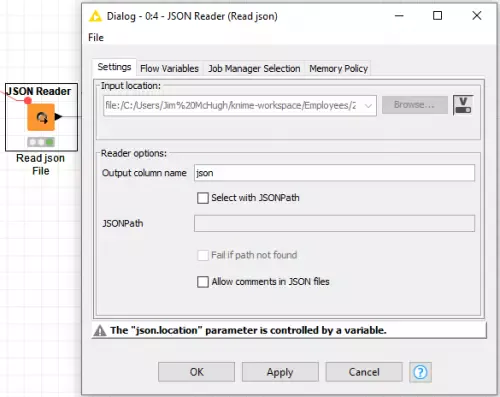

Now that we have our list of files we will put this into a loop for processing. The first node we come to in the loop is the json Reader node (Figure 3.). We pass the file path to the json Reader node from the list we created in the previous step as a flow variable.

Here is where the fun begins. Now that we have read the json file into memory we need to break it into pieces so we can capture the data as columns for our database table. Step 1 is to break the json into columns (Figure 4.). Please note that you should prefix the json path with the header of the information you wish to load.

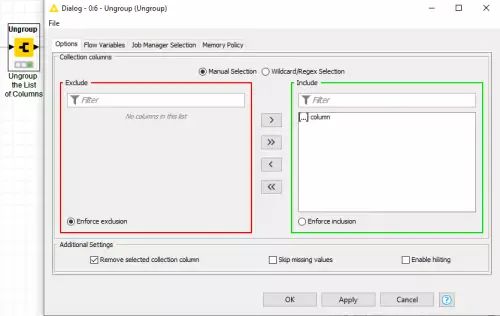

Next, we ungroup the columns (Figure 5.).

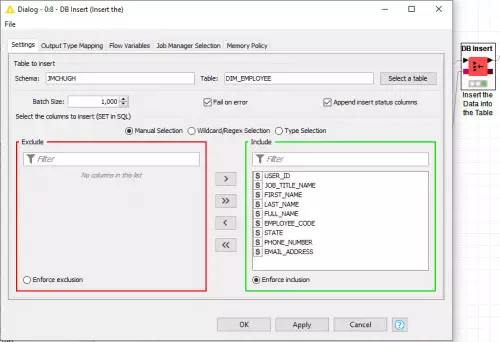

Now that we have the json rows broken out into columns we assign the json column to the database column (Figure 6.).

The output of this node process will contain only the rows of data to insert into your database table (Figure 7).

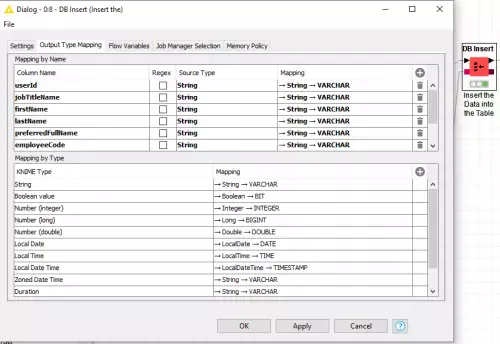

To ensure the success of the DB Insert node verify that all of the data type conversions are correct (Figure 8.).

The first file has been loaded into the database and the loop will continue until the list of files to be processed has been exhausted.

As you can see KNIME provides excellent ETL functionality that is easy to comprehend and use. I highly recommend this software.