Late Arriving Dimensions

In my last post I discussed late arriving facts, and although it is slightly annoying to receive delayed transaction data, the impact on the ETL and accuracy of the data in data warehouse is minimal. As we will discuss in this blog post, this is not the case for late arriving dimension (early arriving fact) data.

As I have discussed throughout this blog series on designing and loading dimensional data warehouses, we always load data in the following order: lookup tables, then dimensions, and finally facts. But what happens when you get fact data before the dimension data arrives or there is a backdated change in the dimensional data? These late arriving dimensions may be generated by a known business process but these late arriving dimensions can cause a significant challenge to the accuracy of the data and test the skill and patience of the ETL developers and testers. Let’s take a look at the challenge the delay is receiving the dimensional data presents and the options we have to resolve these challenges.

The most common example of late arriving dimensions data can be found in the medical insurance industry. For this example, we will assume that the employee was onboarded and had an accident before all of the forms could be completed, transferred to and processed by the insurance company. The hospital will create a medical claim record to be paid by the insurance company, but the insurance company does not yet have a person to associate the claim. A few weeks later the employer forwards over the completed employee forms and the DIM_INSURED record is created for the employee. What happens in the weeks between the claim and the receipt of the insurance information for the employee?

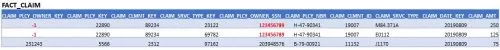

Promote the Facts Using the “Unknown” Dimensional Record to Production

Based on Dimensional Modeling standards we should associate a negative number (eg. -1 = UNKNOWN, -2 = N/A, -3 = Not Provided, etc.) to the fact key when we cannot find a corresponding dimensional key for the natural ID provided by the source system for the fact. At this point we may wish to publish the fact record at this point, even though we do not have a dimensional record to associate with the transaction. I recommend having a process that tries to cleanup these fact records and find a dimensional record to associate with the transaction. There are a couple of ways to do accomplish this task including: leave the fact record as is, update the current fact record inline, or create a new fact record and deprecate the old fact record to capture the historical change and increase accuracy in reporting. The ultimate solution will be based on your customer’s requirements.

Figure 1

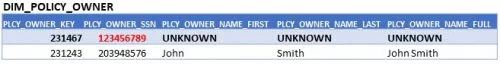

Create a Dimensional Record Using Available Data and Promote Limited Dimension and Fact Records to Production

This is an interesting option and will require additional ETL logic to address this solution. What we do here is we create a dimensional record with the limited information we are provided in the fact record. In most cases this is limited to the natural key. Therefore, we need to ensure there are no other required dimensional attributes which would cause an error on the creation of the record. I recommend the use of a flag to indicate the dimensional record is incomplete. Based on this flag I can update the dimensional record when the late arriving data arrives. All other times, when the dimensional record is created through the dimensional record processing the flag is set to indicate the record is complete and therefore should not be updated.

In both of the above scenarios the customer wants to get the fact out to production so the transaction can be documented as soon as possible with the knowledge the dimensional data will arrive later and may cause changes in the values of some of the reports.

Figure 2

Don’t Promote the Facts with Missing Dimensional Data to Production

After landing the fact data and staging the transactions we could look to see if there are records with missing key values for the provided natural keys and decide that we don’t to process the record until all of the key values can be found. To accomplish this, we may have a flag in the staging fact table that we update when all of the key values in the fact are > 0. (Remember we always use negative numbers for keys to notate some issue with a dimension.) At the end of the process we move the records flagged as “processed” to production and then delete those records from the staging tables, leaving the records needing additional processing in staging for the next ETL run where the process repeats until all dimensional records are found and the record meets the success criteria and can be moved to production and the record meets the success criteria and can be moved to production.

Figure 3

These are relatively simple scenarios where we only receive a single late dimensional record, but what happens when we receive multiple historical records for a dimension? Assuming we are dealing with a Type II SCD, we are creating a significant challenge for the ETL developer and tester. So, when I come across these situations, I always ask the customer the following questions: Is there a way to avoid the tardiness of the dimensional data? Do they understand the impact of changing historical dimensional data on their reporting system? And finally, do they understand the cost of the implementation of this requirement (Is the juice worth the squeeze)?

When this requirement is absolutely necessary, we need to assess if we want the complexity of changing multiple dimensional records and all of the associated fact records to be automated and done procedurally or should this be handled by an off-line process? We need to remember that this decision will also have an impact on all future development as the logic to address the historically changing dimension will need to be created for any fact associated with that historically changing dimension in the future.

As you can see late arriving dimensions provide a level of complexity to the dimensional model. In the first three cases above we can programmatically address the issue with relative ease. The final challenge of multiple late arriving dimensional records significantly raises the complexity of an automated solution.